You’ve probably heard the term “machine learning” tossed around in meetings, articles, or tech talks, often alongside buzzwords like AI, automation, or deep learning.

What does it really mean, and why is it everywhere?

At a surface level, the concept sounds simple enough: it’s a way for computers to learn from data.

Dig deeper, and you’ll discover it’s driving everything from personalised recommendations to real-time fraud detection.

It’s a technology that’s changing how modern systems adapt, make decisions, and grow.

What is machine learning?

Machine learning (ML) is a method that enables computers to learn from data, recognise patterns, and make decisions without being manually programmed for every single task.

Nowadays, you can find it everywhere:

Netflix’s recommendation engine

Gmail’s spam filtering

Chase Bank’s detection of unusual spending activity

Working quietly in the background, ML systems constantly adjust and improve based on the data they receive. This naturally raises the question: how do machines actually learn?

In short, they learn by example. As a rule, the more relevant, good-quality training data an ML model has, the better it becomes at spotting patterns.

However, the answer depends on context, especially when it comes to the difference between machine learning and its more specialised subset, deep learning.

Deep learning vs. machine learning

It’s easy to confuse deep learning with machine learning, especially for those not working directly with artificial intelligence.

Machine learning is a broad field focused on enabling computers to learn from data.

Deep learning is a subfield within ML, inspired by the structure of the human brain. It relies on layers of artificial “neurons” to process complex information, making it especially effective for tasks like image recognition and speech processing.

An easier way to understand the difference is to imagine ML as a toolbox. Deep learning is one of the tools.

How algorithms learn: The human analogy

In a way, machine learning works much like human learning. To start, we can draw direct comparisons:

Data is like experience. The more experience a person has, the more knowledge they accumulate.

Algorithms are like a brain. Just as the brain interprets and makes sense of experiences, algorithms analyse data to find patterns.

The model is like a memory. Humans store what they’ve learned in memory, and ML models store patterns derived from data.

Machines also learn from feedback, just as humans do when they receive correction or guidance.

For example, if a child points to a picture of an animal and hears its name, they learn to associate the picture with a name. Similarly, machines learn to associate data with labels.

There are four main types of machine learning algorithms.

Supervised learning

At its core, supervised learning is like learning with a teacher.

The algorithm is given data along with the correct answers, known as labels. It tries to predict those answers, learns from its mistakes, and improves over time.

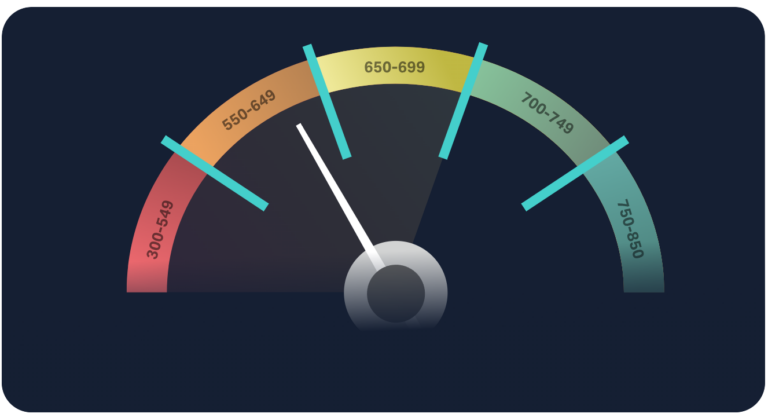

A good example comes from Zest AI, which builds credit scoring models for lenders.

These models are trained on historical loan data labelled with outcomes like “paid in full” or “defaulted.”

By learning patterns linked to good and bad borrowers, the system can assess creditworthiness more accurately than traditional scorecards.
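
To make the "predict, check, correct" loop concrete, here is a minimal sketch of a perceptron, one of the simplest supervised algorithms. It is not Zest AI's method. The two features (`missed_payments`, `years_employed`), the six loan records, and the function names are all invented for illustration.

```python
# Hypothetical loan records: (missed_payments, years_employed) -> label.
# Label 1 means "paid in full", label 0 means "defaulted".
TRAINING_DATA = [
    ((0, 5), 1),
    ((1, 8), 1),
    ((0, 3), 1),
    ((3, 1), 0),
    ((4, 2), 0),
    ((5, 0), 0),
]


def predict(weights, bias, features):
    """Return 1 if the weighted score is positive, otherwise 0."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train_perceptron(data, max_epochs=1000, learning_rate=1.0):
    """Nudge the weights every time a prediction disagrees with its label."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for features, label in data:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * x
                           for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


weights, bias = train_perceptron(TRAINING_DATA)
# Score a new, unseen applicant: no missed payments, six years employed.
print(predict(weights, bias, (0, 6)))  # prints 1 ("paid in full")
```

On this toy data the loop stops after two passes. Real credit models use far more features and data, but the feedback loop is the same idea.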

Unsupervised learning

If supervised learning is like having a teacher, then unsupervised learning is like figuring things out on your own.

The algorithm receives only the data, without any labels, and tries to identify patterns or structure.

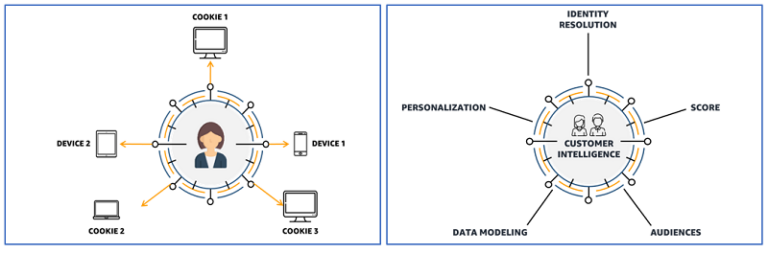

For example, Amazon uses techniques like clustering to group customers based on behavioural data, such as purchase history, browsing habits, and transaction timing.

These insights enable the system to deliver more targeted recommendations and promotions without relying on predefined customer categories.
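
As a rough illustration of clustering, the sketch below runs k-means, one common clustering algorithm, on a handful of made-up customers. It does not represent Amazon's actual system. The features (orders per month, average basket value), the data, and the function names are assumptions, and the starting centroids are simply the first `k` points so the result is repeatable.

```python
import math

# Hypothetical customers: (orders_per_month, average_basket_value).
CUSTOMERS = [
    (1, 20), (2, 25), (1, 30),       # occasional shoppers, small baskets
    (9, 200), (10, 220), (8, 210),   # frequent shoppers, large baskets
]


def nearest_centroid(point, centroids):
    """Return the index of the centroid closest to the point."""
    distances = [math.dist(point, c) for c in centroids]
    return distances.index(min(distances))


def k_means(points, k, max_iterations=100):
    """Group points into k clusters without using any labels."""
    centroids = [tuple(p) for p in points[:k]]  # simple deterministic start
    labels = None
    for _ in range(max_iterations):
        new_labels = [nearest_centroid(p, centroids) for p in points]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
        for index in range(k):
            members = [p for p, label in zip(points, labels) if label == index]
            if members:  # keep the old centroid if a cluster is empty
                centroids[index] = tuple(
                    sum(axis) / len(members) for axis in zip(*members)
                )
    return labels, centroids


labels, centroids = k_means(CUSTOMERS, k=2)
print(labels)  # prints [0, 0, 0, 1, 1, 1]
```

Nobody told the algorithm which customers were "occasional" or "frequent". The two groups emerge from the data alone. In practice, features on very different scales would usually be normalised first.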

Semi-supervised learning

Semi-supervised learning combines the two previous approaches.

In this approach, only a small portion of the data is labelled while the rest remains unlabelled. The algorithm uses the labelled examples to make sense of the unlabelled data.

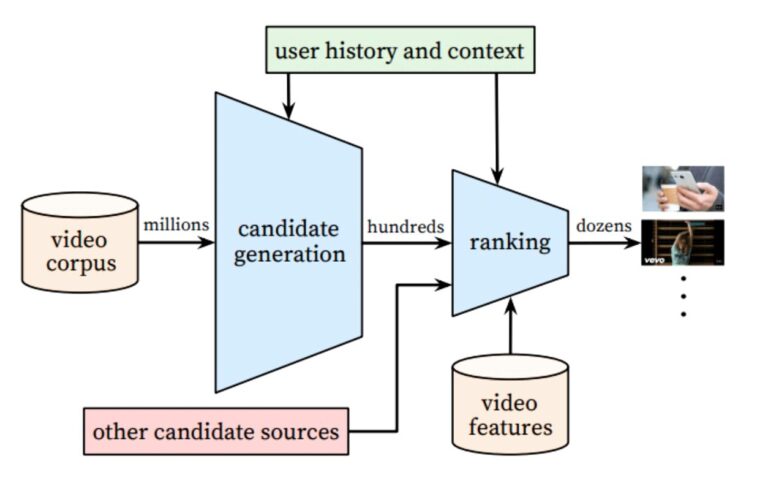

For example, researchers at Google developed a system called LEAS (Local Expansion at Scale) to identify fake engagements, such as likes, comments, and subscriptions on YouTube.

They started with a small set of known “seed” spam accounts and then applied a semi-supervised clustering algorithm to propagate labels to other users showing similar behaviour in the engagement graph.
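
The idea of spreading a few known labels across a graph can be sketched with a very simple label propagation routine. This is not the LEAS algorithm, only a simplified illustration of the same general principle. The account names, the edges, and the majority-vote rule are all assumptions.

```python
from collections import Counter

# Hypothetical "similar behaviour" links between accounts.
EDGES = [
    ("s1", "s2"), ("s1", "s3"), ("s2", "s3"), ("s3", "s4"), ("s2", "s4"),
    ("u1", "u2"), ("u1", "u3"), ("u2", "u3"), ("u3", "u4"), ("u2", "u4"),
    ("s4", "u4"),  # a single link between the two groups
]

# Only two accounts are labelled up front.
SEED_LABELS = {"s1": "spam", "u1": "legit"}


def build_graph(edges):
    """Turn a list of undirected edges into a neighbour lookup."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def propagate_labels(graph, seed_labels):
    """Give each unlabelled node the majority label of its labelled neighbours."""
    labels = dict(seed_labels)
    while True:
        updates = {}
        for node, neighbours in graph.items():
            if node in labels:
                continue
            votes = Counter(labels[n] for n in neighbours if n in labels)
            ranked = votes.most_common(2)
            # Assign a label only when there is a clear winner (no tie).
            if ranked and (len(ranked) == 1 or ranked[0][1] > ranked[1][1]):
                updates[node] = ranked[0][0]
        if not updates:  # nothing new could be labelled in this round
            return labels
        labels.update(updates)


labels = propagate_labels(build_graph(EDGES), SEED_LABELS)
print(labels["s4"], labels["u4"])  # prints: spam legit
```

Starting from two labelled accounts, all eight end up with a label after two rounds.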

Reinforcement learning

Reinforcement learning is often described as the “trial and error” method. The algorithm takes actions, receives rewards or penalties based on those actions, and gradually learns to make better decisions.

Some Boston Dynamics robots use reinforcement learning to refine their navigation and movement. Each successful step, turn, or balance adjustment results in a reward signal.

Over time, the robot learns to stay balanced and move efficiently in dynamic environments.
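
The reward-driven loop can be sketched with tabular Q-learning, a classic reinforcement learning algorithm. This is a toy example and has nothing to do with how Boston Dynamics trains its robots. The five-cell corridor, the reward values, and the hyperparameters are all assumptions chosen to keep the example small.

```python
import random

N_STATES = 5            # positions 0..4 on a corridor
GOAL = N_STATES - 1     # reaching position 4 ends the episode
ACTIONS = (-1, +1)      # index 0 = step left, index 1 = step right


def step(state, action):
    """Move along the corridor and return (next_state, reward)."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else -0.01  # small penalty per move
    return next_state, reward


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values by trial and error (epsilon-greedy Q-learning)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            if rng.random() < epsilon:  # explore: try a random action
                a = rng.randrange(len(ACTIONS))
            else:                       # exploit: take the best known action
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward = step(state, ACTIONS[a])
            # Move the estimate towards reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action for every non-goal position."""
    return ["left" if row[0] > row[1] else "right" for row in q[:GOAL]]


print(greedy_policy(train()))  # prints ['right', 'right', 'right', 'right']
```

The agent is never told that moving right is correct. It discovers this because moves towards the goal eventually lead to the reward.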

Why machine learning matters (and why you should care)

Machine learning affects everyday life by supporting decision-making across many industries, for example:

Healthcare: Diagnosing diseases and predicting patient outcomes

Finance: Risk scoring, fraud detection, and credit approvals

Hiring: Resume screening, skill matching, and candidate ranking

However, there’s a catch: these systems don’t operate in a vacuum. Machine learning models are not neutral. They reflect the data they’re trained on. This is both their greatest strength and their biggest weakness.

If the training data is biased, outdated, or incomplete, the model’s predictions will be too. That means real-world decisions, like who gets a loan, what treatment a patient receives, or who gets invited to an interview, may be skewed or unfair.
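
A tiny, deliberately simplistic sketch shows how this happens. The "model" below just memorises the most common past decision for each kind of applicant. The regions, income bands, and decisions are invented data, not drawn from any real lender.

```python
from collections import Counter, defaultdict

# Hypothetical past decisions: (region, income_band, decision).
# Applicants from "south" were mostly rejected, even with high income.
HISTORICAL_DECISIONS = [
    ("north", "high", "approved"),
    ("north", "high", "approved"),
    ("north", "low", "rejected"),
    ("south", "high", "rejected"),
    ("south", "high", "rejected"),
    ("south", "high", "approved"),
    ("south", "low", "rejected"),
]


def train_majority_model(rows):
    """Learn the most common past decision for each (region, income_band)."""
    outcomes = defaultdict(Counter)
    for region, income_band, decision in rows:
        outcomes[(region, income_band)][decision] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in outcomes.items()}


model = train_majority_model(HISTORICAL_DECISIONS)
# Two applicants with the same income band, differing only by region.
print(model[("north", "high")])  # prints approved
print(model[("south", "high")])  # prints rejected
```

The model has not decided anything on merit. It has only reproduced the pattern already present in its training data.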

When building your own ML model, ask:

Where did the training data come from?

What decisions is the model influencing?

Is there a human in the loop?

Ethics, fairness, and transparency aren’t afterthoughts. They are at the core of responsible ML model use. Angie Beasley, associate professor at the University of Texas at Austin, explains why this matters in a TED Talk.

Final words

As with any powerful tool, using technology responsibly starts with understanding how it works. Machine learning is based on data, math, and a lot of fine-tuning. The quality of that data directly shapes the quality of the output.

The same principle holds true for humans: if we learn from bad information, we form false assumptions.

It’s easy to assume that you don’t need to understand how ML works unless you’re a developer, but that mindset is risky.

Machine learning is everywhere. It influences what we see, what we buy, and even the decisions we make.

You don’t need to be an expert. However, you do need to be informed because this is how you stay in control.

FAQ

How does a machine learning algorithm learn?

At its core, a machine learning algorithm learns from its mistakes: it updates its parameters to make more accurate predictions or decisions based on examples.

It’s like a feedback-driven trial-and-error process: the algorithm guesses, checks how far off it was, then tweaks itself to do better next time.

Do machine learning algorithms learn only from data?

Yes, their only source of learning is the data they’re given.

Algorithms don’t “understand” context or meaning. They just analyse the data and try to map inputs to outputs. If the data is noisy, biased, or incomplete, the model will reflect those problems.

Can I learn machine learning on my own?

Yes, but it takes discipline and clarity on what you want to achieve.

Many people teach themselves ML through free courses, books, and hands-on projects. Keep in mind that theory alone isn’t enough. You need to apply what you learn to real problems, even small ones.

How is a machine learning model trained?

Training involves feeding an algorithm a large amount of labelled or structured data and asking it to make predictions. Each time it gets something wrong, an optimisation method (such as gradient descent) adjusts the model’s internal parameters to reduce the error.

Over time, these adjustments lead to a model that performs well on similar data it hasn’t seen before.
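
For readers who want to see the adjustment step, here is a minimal sketch of gradient descent fitting a straight line. The data is made up (it follows y = 2x + 1 exactly), and the learning rate and step count are assumptions picked so that this small example converges.

```python
# Hypothetical training data generated from y = 2x + 1.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]


def train(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = w * x + b by repeatedly reducing the mean squared error."""
    w, b = 0.0, 0.0  # start with a model that knows nothing
    n = len(xs)
    for _ in range(steps):
        # How far off is each prediction?
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step each parameter a little in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(XS, YS)
print(round(w, 3), round(b, 3))   # close to 2.0 and 1.0
print(round(w * 10 + b, 2))       # prediction for x = 10, a value not in the training data
```

Larger models have millions of parameters instead of two, but each training step follows the same pattern: measure the error, compute the gradient, and nudge the parameters.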