Nowadays, it seems that everyone has a stance on artificial intelligence, whether informed or not. Early debates around AI’s potential were valuable, but today, the conversation is often stuck in speculation and misinformation.

Companies often feel pressure to adopt AI and rush the process, ignoring the potential consequences. When expectations aren’t met, they may dismiss artificial intelligence as overhyped or useless.

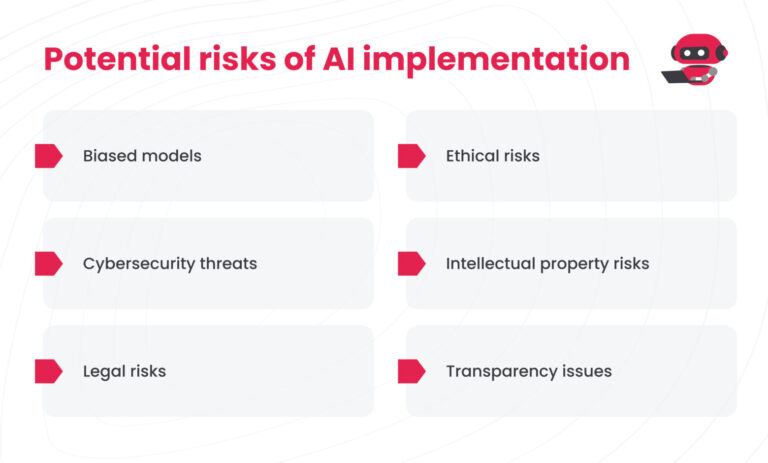

Understanding, identifying, and mitigating the risks can mean the difference between meaningful progress and costly setbacks.

Dangers of rushing AI adoption

Some might think of AI project success as a gamble: you either beat the odds or join the 85% of AI projects that fail. In reality, even the most promising projects can collapse when strategy, data quality, or expectations aren’t handled properly.

Rushing AI adoption may lead to many issues that could’ve been avoided.

Biased model

Bias is one of the most prominent issues in AI adoption. Cleaning biased data can be repetitive but straightforward; dealing with biased models, on the other hand, requires deep analysis and careful adjustment.

Sometimes, bias is unintentionally embedded in the design itself. Developers, knowingly or not, bake their assumptions into models through how they define goals or set up the training process.

A well-known real-world example is Amazon’s AI recruitment tool. The model learned to favour male candidates because it was trained on a decade’s worth of resumes that came mostly from men. As a result, it penalised resumes that included the word “women’s” or referenced all-women colleges.

Because AI models find patterns in data and optimise for them, they can become unfair without anyone intending it. Amazon’s model simply reproduced the historical hiring patterns that favoured men, which led it to downgrade resumes from women.

Sometimes, the bias stems from the training objective. For example, if a model’s objective is to maximise clicks, it might favour controversial or polarising content. As one researcher from the University of Central Florida put it, such biases “have far-reaching effects, limiting media diversity in the process by hurting not only consumers but also content providers from marginalised communities.”
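
One simple way to catch the kind of skew described above is to compare how often a model selects people from each group. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The 0.8 threshold follows the “four-fifths rule” from US employment guidelines and is used here only as a rough screening heuristic; the group names and outcomes are invented for illustration, and a low ratio is a signal to investigate, not proof of bias.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where `selected`
    is True when the model shortlisted the candidate.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    # If nobody was selected at all, there is no disparity to measure.
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical screening outcomes, not real data.
decisions = (
    [("men", True)] * 60 + [("men", False)] * 40
    + [("women", True)] * 30 + [("women", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(decisions)
print(rates)            # {'men': 0.6, 'women': 0.3}
print(round(ratio, 2))  # 0.5
if ratio < 0.8:
    print("Possible adverse impact: review the model and its training data")
```

In practice, the same check can run on every batch of model decisions as part of a regular audit, alongside a look at the training data itself.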


Cybersecurity threats

AI systems often process large volumes of sensitive business data, which makes them a prime target for malicious actors. Beyond traditional vulnerabilities, AI introduces new security risks tied to how models are trained, deployed, and accessed.

One of the most prominent threats to AI systems is the adversarial attack. The aim is simple: feed the model subtly manipulated inputs designed to trick it into making incorrect predictions. For example, altered images can mislead computer vision systems, and small changes to input data can cause self-driving cars to misinterpret road signs.
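
To make “subtly manipulated inputs” concrete, here is a toy sketch of the Fast Gradient Sign Method (FGSM), a classic adversarial technique, applied to a small logistic-regression classifier rather than a real vision model. The weights and input are hypothetical. Each feature moves by at most `epsilon`, yet the prediction flips; attacks on deep networks follow the same idea, with the gradient obtained through backpropagation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, label, epsilon):
    """Shift every feature by +/- epsilon in the direction that increases
    the model's binary cross-entropy loss for the true label."""
    p = predict_proba(weights, bias, x)
    # For logistic regression, d(loss)/dx = (p - label) * weights.
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy model and input.
weights, bias = [2.0, -1.5, 1.0], -0.5
x = [0.6, 0.2, 0.3]
x_adv = fgsm(weights, bias, x, label=1, epsilon=0.3)

print(round(predict_proba(weights, bias, x), 3))      # above 0.5: class 1
print(round(predict_proba(weights, bias, x_adv), 3))  # below 0.5: class 0
```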

Fooling a model can have devastating effects, but model theft can be an even bigger problem. Attackers can use methods such as reverse engineering or systematically querying public APIs to replicate proprietary models. A stolen model can then be deployed elsewhere, including in flawed or unsafe versions.

Legal risks

We’re still in the “Wild West” era of AI. In most parts of the world, regulation has not kept pace with rapid development, leaving serious gaps in accountability and liability.

The EU AI Act and frameworks like the GDPR are steps toward structure, but the overall legal environment remains fragmented and difficult to navigate. This uncertainty is especially risky when AI is used in sensitive contexts like hiring, credit scoring, or medical decision-making, where mistakes can violate individual rights.

Missteps can lead to regulatory scrutiny and hefty fines. Failing to stay aligned with evolving laws is one of the most common mistakes businesses make when deploying AI systems.

Ethical risks

AI shouldn’t be used for everything. Tasks like monitoring employee productivity, screening candidates, or personalising prices can technically be handled by AI, but they raise serious ethical concerns.

Even when these practices are legal, the lack of clear regulation leaves room for decisions that undermine trust. If AI feels intrusive, discriminatory, or exploitative, businesses risk backlash from both employees and customers.

Ethical AI use is a matter of business values, not only of compliance with current laws and regulations. Businesses that fail to see this risk damaging their reputation and losing both employees and customers.

Intellectual property risks

When it comes to intellectual property (IP), AI sits in a grey area. Using pre-trained AI models or third-party APIs raises questions about who owns the resulting output. As one study summarised the issue, “Traditional IP laws, designed to protect human-generated works and inventions, are increasingly inadequate in addressing the complexities introduced by AI.” Issues around authorship, ownership, and the legal status of AI-generated content remain unresolved, and this legal uncertainty presents a growing risk.

There’s no clear answer yet, but ignoring the question can be costly. Businesses should carefully review the licensing terms of any AI tools they use and know where their data comes from, especially in industries where IP is a core asset.
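
A lightweight way to act on this advice is to keep an inventory of the models, datasets, and APIs you depend on and flag anything with unknown provenance or an unapproved licence. The sketch below is illustrative only: the allowlist, the `Asset` fields, and the inventory entries are hypothetical, licences are recorded as SPDX identifiers, and deciding which licences are acceptable remains a legal question the code does not settle.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist; which licences are acceptable is a legal decision.
APPROVED_LICENCES = {"Apache-2.0", "MIT", "CC-BY-4.0"}


@dataclass
class Asset:
    name: str
    kind: str               # "model", "dataset" or "api"
    licence: Optional[str]  # SPDX identifier, or None if unknown
    source: Optional[str]   # where the asset came from, or None if unknown


def review_assets(assets):
    """Return (asset name, issue) pairs that need legal or human review."""
    issues = []
    for asset in assets:
        if asset.source is None:
            issues.append((asset.name, "unknown provenance"))
        if asset.licence is None:
            issues.append((asset.name, "no licence recorded"))
        elif asset.licence not in APPROVED_LICENCES:
            issues.append((asset.name, f"licence {asset.licence} needs review"))
    return issues


# Hypothetical inventory entries.
inventory = [
    Asset("sentiment-model", "model", "Apache-2.0", "internal model registry"),
    Asset("scraped-reviews", "dataset", None, None),
    Asset("image-captioner", "model", "CC-BY-NC-4.0", "third-party vendor"),
]

for name, issue in review_assets(inventory):
    print(f"{name}: {issue}")
```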

Transparency issues

With some AI models, especially deep learning systems, explaining the decision-making process is incredibly difficult. The reasoning is often buried in layers of statistical correlations, leaving users and stakeholders in the dark.

The problem becomes even bigger for industries like healthcare or finance, where decisions must be justified. Efforts to develop explainable AI (XAI) have made progress, but most current methods only provide rough approximations of how a model “might” be thinking, not a true explanation.
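
Permutation importance is one example of the “rough approximation” kind of explanation: shuffle one input column at a time and measure how much the model’s accuracy drops. It shows which inputs a model relies on overall, but not why it made any single decision. The sketch below treats a hypothetical `black_box` function as the opaque model and uses synthetic data; libraries such as scikit-learn offer production versions of the same technique.

```python
import random


def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(model, rows, labels, n_repeats=5, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Rebuild the rows with only column j shuffled.
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


def black_box(row):
    # Hypothetical stand-in for an opaque model; it only uses feature 0.
    return 1 if row[0] > 0.5 else 0


# Synthetic data with two features, only the first of which matters.
data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [1 if r[0] > 0.5 else 0 for r in rows]

importances = permutation_importance(black_box, rows, labels)
print(importances)  # large drop for feature 0, zero for feature 1
```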

The consequences are already visible: trust in AI has declined by 8% globally over the last five years, and by 15% in the U.S. Opaque systems erode confidence and hold back wider adoption.


AI risk management

A proper AI implementation requires oversight and continuous evaluation. This is a core idea of AI risk management.

As part of AI governance, risk management focuses specifically on the vulnerabilities and threats an AI model might face. The SANS Institute gave an extensive explanation of AI risk management at the AI Cybersecurity Summit.

One of the most powerful tools in AI risk management is the AI audit. Simply put, it’s a formal review of the system: the data sources, model behaviour, fairness, and alignment with ethical standards.

Regular audits help detect biases, flag security issues, and identify compliance gaps.

You might think risk management is needed only while an AI model is being developed, but that’s far from true. Models tend to drift over time as data and user behaviour evolve, so constant monitoring is a must.

An unchecked AI model, no matter how well-trained it is, can start producing flawed or biased results in changing environments.
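
One common way to monitor drift is the Population Stability Index (PSI), which compares the distribution of a feature in production with the distribution the model was trained on. The sketch below uses equal-width bins over the reference range and synthetic data. The frequently quoted thresholds (below 0.1 stable, 0.1 to 0.25 worth watching, above 0.25 significant) are industry rules of thumb, not formal standards.

```python
import math
import random


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index between two samples of one feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width) if width else 0
            # Values outside the reference range go into the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps avoids log(0) for empty bins.
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)
    actual = proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Synthetic data: training-time feature values vs. two production samples.
rng = random.Random(7)
reference = [rng.gauss(0.0, 1.0) for _ in range(5000)]
same_dist = [rng.gauss(0.0, 1.0) for _ in range(5000)]
shifted = [rng.gauss(0.8, 1.0) for _ in range(5000)]

print(round(psi(reference, same_dist), 3))  # small: no meaningful drift
print(round(psi(reference, shifted), 3))    # large: the input has drifted
```

A scheduled job could compute this per feature and alert when the index crosses whatever threshold your team agrees on.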

AI safety considerations: 5 tips to keep you safe

Reducing AI risks is all about awareness and building robust systems. Here’s what you need to do to ensure your model works as intended.

Data quality assurance

Clean, reliable data is the foundation of any successful artificial intelligence system. No matter how advanced the model architecture, its output will only be as good as the data it was trained on.

Poor data quality is one of the most common reasons for flawed AI behaviour. Issues like missing labels, duplicate records, corrupted files, and imbalanced representation can degrade training results.

Ensuring data quality requires governance. Always verify that datasets are sourced legally and ethically, especially when data is scraped or obtained from third parties. Regular audits of your data pipelines can help detect anomalies early, reducing the risk of faulty or unfair results.
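
Some of the issues listed above are easy to check automatically before training. The sketch below assumes a hypothetical labelled text dataset stored as a list of dictionaries; it counts missing labels and exact duplicates and flags class imbalance above an assumed maximum ratio of 3:1. Real pipelines would add checks for near-duplicates, corrupted files, and schema violations.

```python
from collections import Counter


def data_quality_report(records, label_key="label", max_imbalance=3.0):
    """Count missing labels and exact duplicates, and flag class imbalance."""
    missing = sum(1 for r in records if r.get(label_key) in (None, ""))

    seen = set()
    duplicates = 0
    for r in records:
        key = tuple(sorted(r.items()))  # order-independent record fingerprint
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    counts = Counter(
        r[label_key] for r in records if r.get(label_key) not in (None, "")
    )
    ratio = max(counts.values()) / min(counts.values()) if counts else 0.0
    return {
        "missing_labels": missing,
        "duplicates": duplicates,
        "class_counts": dict(counts),
        "imbalanced": ratio > max_imbalance,
    }


# Hypothetical sample of a review-sentiment dataset.
records = [
    {"text": "great product", "label": "positive"},
    {"text": "great product", "label": "positive"},  # exact duplicate
    {"text": "arrived broken", "label": "negative"},
    {"text": "works as described", "label": None},   # missing label
    {"text": "love it", "label": "positive"},
    {"text": "five stars", "label": "positive"},
    {"text": "would buy again", "label": "positive"},
]

print(data_quality_report(records))
# {'missing_labels': 1, 'duplicates': 1, 'class_counts': {'positive': 5, 'negative': 1}, 'imbalanced': True}
```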

Rigorous testing and model validation

An AI model can perform well on benchmark datasets but struggle with real-world tasks. To see how it really performs, test it on real-world scenarios and edge cases.

Beyond overall accuracy, focus on a broader range of metrics like precision, recall, and fairness across different data subgroups. This helps uncover hidden biases that might not surface in aggregate results.
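
Here is a minimal sketch of computing precision and recall separately for each subgroup, assuming binary labels and a group attribute recorded for each example. The data is invented for illustration: group A looks perfect, while group B’s recall shows that most of its true positives are missed.

```python
from collections import defaultdict


def per_group_metrics(y_true, y_pred, groups):
    """Precision and recall for the positive class, per subgroup."""
    tallies = defaultdict(lambda: {"tp": 0, "fp": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        counts = tallies[g]  # register the group even if it has no errors
        if p == 1 and t == 1:
            counts["tp"] += 1
        elif p == 1 and t == 0:
            counts["fp"] += 1
        elif p == 0 and t == 1:
            counts["fn"] += 1

    metrics = {}
    for g, c in tallies.items():
        predicted_pos = c["tp"] + c["fp"]
        actual_pos = c["tp"] + c["fn"]
        metrics[g] = {
            # NaN marks a metric that is undefined for this group.
            "precision": c["tp"] / predicted_pos if predicted_pos else float("nan"),
            "recall": c["tp"] / actual_pos if actual_pos else float("nan"),
        }
    return metrics


# Hypothetical evaluation results for two subgroups.
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0]
groups = ["A"] * 6 + ["B"] * 6

metrics = per_group_metrics(y_true, y_pred, groups)
for group, m in metrics.items():
    print(group, m)
# A {'precision': 1.0, 'recall': 1.0}
# B {'precision': 0.5, 'recall': 0.25}
```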

Validation is a continuous part of the quality assurance cycle. Ongoing monitoring and re-evaluation show whether the model stays reliable as data changes and signal when it needs retraining.

Adherence to ethical guidelines and principles

In a still largely unregulated AI industry, ethical compliance is not only reputation management but also a long-term advantage. Companies that take ethics seriously earn more trust from users, regulators, and partners.

Follow frameworks such as the OECD AI Principles or the EU’s Ethics Guidelines for Trustworthy AI to establish a clear baseline. Use them to guide your approach, document your decision-making, consider the impact on all stakeholders, and account for possible unintended consequences.

Implementing cybersecurity measures

AI systems should be held to security standards at least as strict as those for other critical software. That means encrypting data both in transit and at rest, enforcing strict access controls, and using anomaly detection to identify suspicious behaviour early.

A common misconception is that hosting an artificial intelligence model on a secure cloud makes it inherently safe. In reality, no system is immune to threats, and AI models, especially those with access to sensitive data, should be treated as high-value targets.
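
Anomaly detection can start very simply. The sketch below flags an API key whose request count in the current hour sits far above its own history, using a z-score with an assumed threshold of 3 standard deviations. The traffic numbers are hypothetical. A sudden burst of queries like this is also one of the signs of the model-extraction attempts described earlier. Production systems would typically account for seasonality and combine several signals.

```python
import statistics


def is_anomalous(history, current, threshold=3.0):
    """Flag `current` if it is more than `threshold` standard deviations
    above the mean of `history` (e.g. hourly request counts per API key)."""
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        # Perfectly flat history: any increase is unusual.
        return current > mean
    return (current - mean) / stdev > threshold


# Hypothetical hourly request counts for one API key.
history = [120, 98, 110, 105, 130, 115, 101, 125]

print(is_anomalous(history, 119))   # False: normal traffic
print(is_anomalous(history, 2400))  # True: possible bulk querying
```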

Compliance with legal and regulatory requirements

Stay ahead of regulations, not behind them. Monitor where and how artificial intelligence is used, how it treats personal data, and how it handles decisions with legal consequences.

Depending on where you operate, ensure compliance with the EU AI Act or the relevant US federal and state rules, assign compliance leads, and document your processes.

Final words

AI implementation can either push businesses to new heights or become a source of setbacks and frustration. The difference lies in preparing for AI adoption, understanding the risks, and knowing how to manage them.

AI models can be, and are, valuable tools, but they require proper care. Most of the risks don’t come from the technology itself; they stem from how people design, deploy, and monitor it.

Handle artificial intelligence with care, and it can deliver real value without the hidden costs.

How Altamira can help

If you’re looking to add powerful AI tools to your business, ensure AI readiness, or just take your products and services to the next level, our stress-free approach will help you achieve your goals.

Zero headaches: A decade of experience and a proven track record of pushing the boundaries of what’s possible with AI.

Tangible value: Our commitment to your project starts even before the contract is signed, with prototypes that help you visualise and test concepts.

Transparent communication: Know everything about your project with daily or weekly updates.

Get in touch, and bring your AI-powered ideas to life.

FAQ

Start with humans in the loop. AI systems should support decisions, not make them in isolation, especially in areas that directly affect people. Regular audits, diverse training data, and clear accountability are key. If no one knows how an AI system reaches a decision, that’s already a red flag.
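
One common way to put a human in the loop is to let the system act on its own only when it is confident and the stakes are low, and to route everything else to a person. The sketch below assumes each AI decision carries a confidence score and a flag marking decisions that significantly affect someone. The `Decision` fields and the 0.9 threshold are hypothetical choices to tune for your own use case.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    confidence: float  # model's confidence in the outcome, 0.0 to 1.0
    high_impact: bool  # e.g. affects someone's job, credit or health


def route(decision, min_confidence=0.9):
    """Return 'auto' only for confident, low-impact decisions."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto"


print(route(Decision("approve refund", 0.97, False)))    # auto
print(route(Decision("reject applicant", 0.99, True)))   # human_review
print(route(Decision("close ticket", 0.62, False)))      # human_review
```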

AI can be efficient, but it’s not always accurate. It might misunderstand what customers are asking, give incorrect information, or escalate minor issues. If the model’s training data wasn’t properly checked, the model could reflect or amplify biases. Heavy reliance on artificial intelligence could lead to losing the customer’s trust.

It depends on how it’s used. When done right, artificial intelligence speeds up response times and solves simple issues quickly. But when done poorly, it frustrates people, especially when they feel like they’re talking to a wall.

People often assume that artificial intelligence is always correct or neutral, which is not true. Biased models, flawed logic, and an inability to notice and address nuance are the reasons why human quality and sanity checks are essential. The biggest risk isn’t the tech itself; it’s how some people rely on it blindly.