
Though GPT-3 as a technology is a couple of years old, it attracted a new round of attention in November 2022, when OpenAI, the developer of GPT-3, announced its breakthrough chatbot, ChatGPT. If you’ve missed all the fuss for any reason, we’ll briefly describe what it is and why everyone is talking about it.

Our primary focus today, however, is on GPT-3 alternatives you can use for free. So, let’s start with the basics and then move on to an overview of open-source analogs of this buzzy technology.

What Is GPT-3?

The GPT-3 model (short for Generative Pretrained Transformer 3) is an artificial intelligence model that can produce almost any kind of human-like text. GPT-3 has already “tried its hand” at poetry, emails, translations, tweets, and even code. To generate content, it needs only a short prompt that sets the topic.
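Since the prompt is the only thing that steers the model, here is a minimal sketch of how a zero-shot or few-shot prompt might be assembled as plain text. The `build_prompt` helper, the translation task, and the `Input:`/`Output:` layout are illustrative assumptions, not a format that OpenAI’s API requires; the resulting string would simply be sent to the model as its prompt.

```python
from __future__ import annotations


def build_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Assemble a plain-text prompt: no examples for zero-shot, a few for few-shot.

    Hypothetical helper for illustration; the layout is an assumption.
    """
    lines = [instruction.strip(), ""]
    for source, target in examples:
        # Each demonstration shows the model the expected input/output pattern.
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The model is expected to continue the text right after the final "Output:".
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    few_shot = build_prompt(
        "Translate English to French.",
        [("cheese", "fromage"), ("house", "maison")],
        "book",
    )
    print(few_shot)
```

Passing an empty example list gives a zero-shot prompt; adding a handful of demonstrations is what is usually meant by few-shot prompting.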

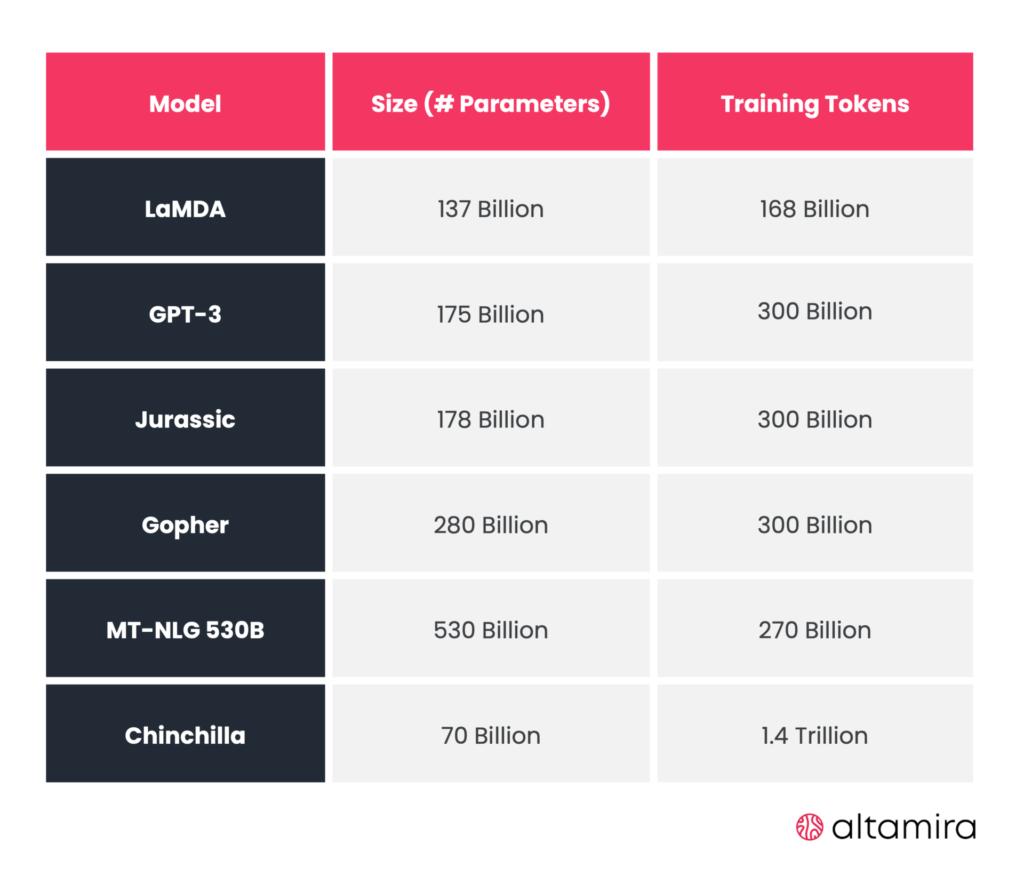

In fact, GPT-3 is a large language model (LLM), in other words, a complex neural network with 175 billion parameters. It was trained on a huge amount of information from across the internet (and by “huge” we mean approximately 700 GB of data).

Freelance GPT-3 developers are available for hire to build diverse tools and applications with this language model, which gives everyone an opportunity to create with GPT-3.

What Is ChatGPT?

Top 9 Free GPT-3 Alternative AI Models

Now that you have an idea of what technology we are talking about, let’s move on to OpenAI GPT-3’s competitors.

OPT by Meta

By sharing OPT-175B, we aim to help advance research in responsible large language model development and exemplify the values of transparency and openness in the field.

AlexaTM by Amazon

GPT-J and GPT-NeoX by EleutherAI

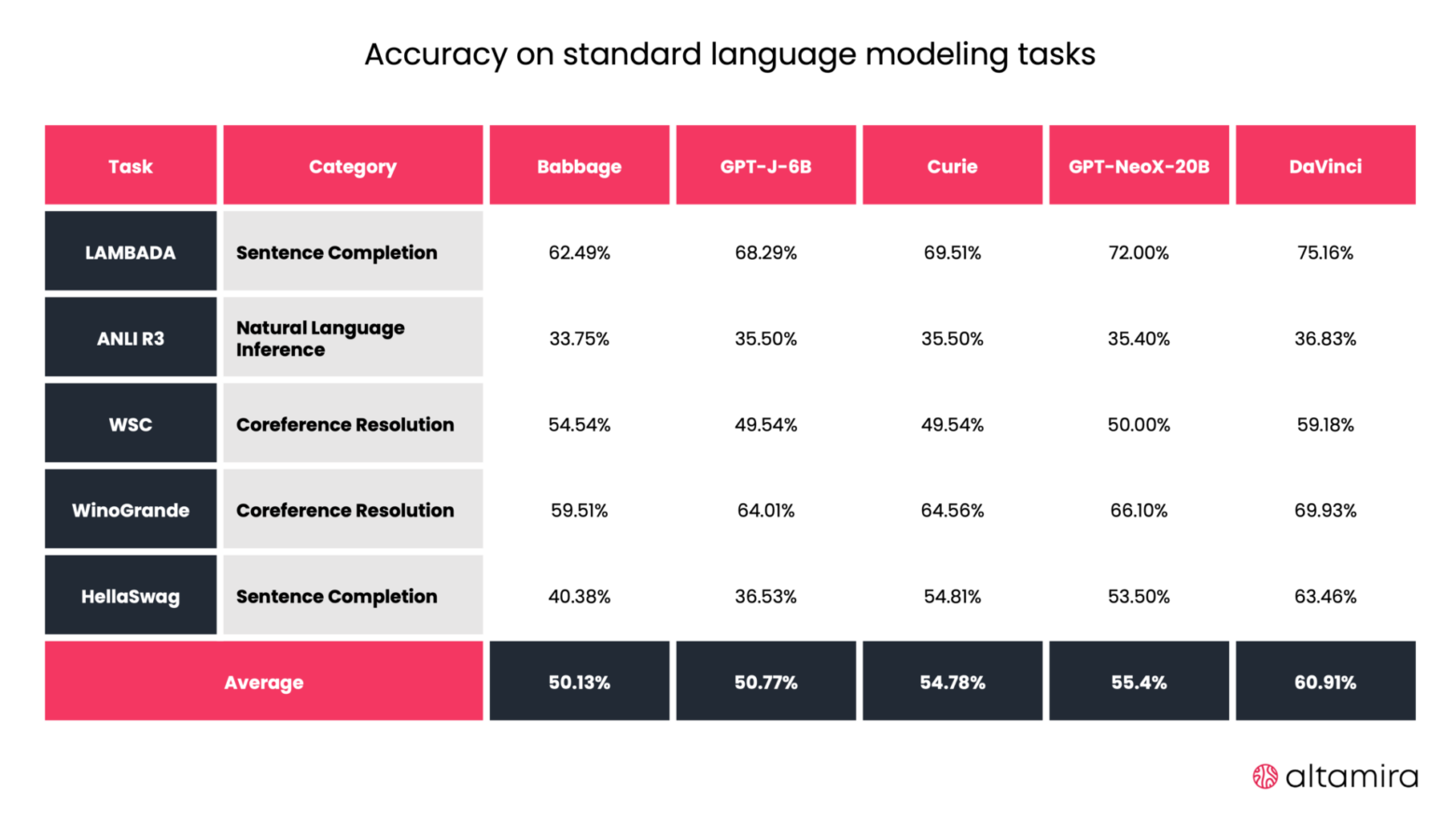

As you can see, there’s little to no difference in performance between the open-source GPT-J and GPT-NeoX and the paid GPT-3 models.

Jurassic-1 language model by AI21 Labs

Jurassic-1 is an autoregressive natural language processing (NLP) model, available in open beta for developers and researchers.

It isn’t fully open-source, but upon registration you get $90 in free credits. You can use them in the playground with pre-designed templates for rephrasing, summarization, writing, chatting, drafting outlines, tweeting, coding, and more. What’s more, you can create and train your own custom models.

Jurassic-1 might become quite a serious rival to GPT-3, as it comes in two sizes: J1-Jumbo, with 178B parameters, and J1-Large, with 7.5B parameters. That makes J1-Jumbo 3B parameters larger than the largest GPT-3 model (175B).

CodeGen by Salesforce

The future of coding is the intersection of human and computer languages — and conversational AI is the perfect bridge to connect the two

The CodeGen release comes in three model types (NL, multi, and mono), each available in four sizes (350M, 2B, 6B, and 16B parameters). Each model type is trained on a different dataset:

- NL models use The Pile.

- Multi models are based on NL models and use a corpus with code in various programming languages.

- Mono models are based on multi models and use a corpus with Python code.
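The three model types and four sizes described above form a small matrix of checkpoints, and each type continues training from the previous one. The sketch below models that lineage in plain Python. The `Salesforce/codegen-{size}-{type}` identifiers mirror the naming used for the checkpoints on the Hugging Face Hub, but treat them as an assumption to verify; `checkpoint_ids` and `training_chain` are hypothetical helpers.

```python
SIZES = ["350M", "2B", "6B", "16B"]

# model type -> (type it continues training from, data added at this stage)
MODEL_TYPES = {
    "nl": (None, "The Pile"),
    "multi": ("nl", "code in multiple programming languages"),
    "mono": ("multi", "Python code"),
}


def checkpoint_ids():
    """Every type/size combination: 3 types x 4 sizes = 12 checkpoints.

    The ID format is assumed from Hugging Face Hub naming.
    """
    return [f"Salesforce/codegen-{size}-{kind}" for kind in MODEL_TYPES for size in SIZES]


def training_chain(kind):
    """Datasets a model type has seen, from the first training stage to the last."""
    chain = []
    current = kind
    while current is not None:
        parent, data = MODEL_TYPES[current]
        chain.append(data)
        current = parent
    return list(reversed(chain))


if __name__ == "__main__":
    print(len(checkpoint_ids()))    # 12
    print(training_chain("mono"))   # ['The Pile', 'code in multiple programming languages', 'Python code']
```

Walking the parent links makes the design visible: a mono model has effectively seen all three datasets, which is why it is the natural choice for Python-specific work.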

The most fascinating thing about CodeGen is that even people without a technical background can use it. Still, programming knowledge helps achieve better and more elegant solutions, as AI isn’t perfect yet.

Megatron-Turing NLG by NVIDIA and Microsoft

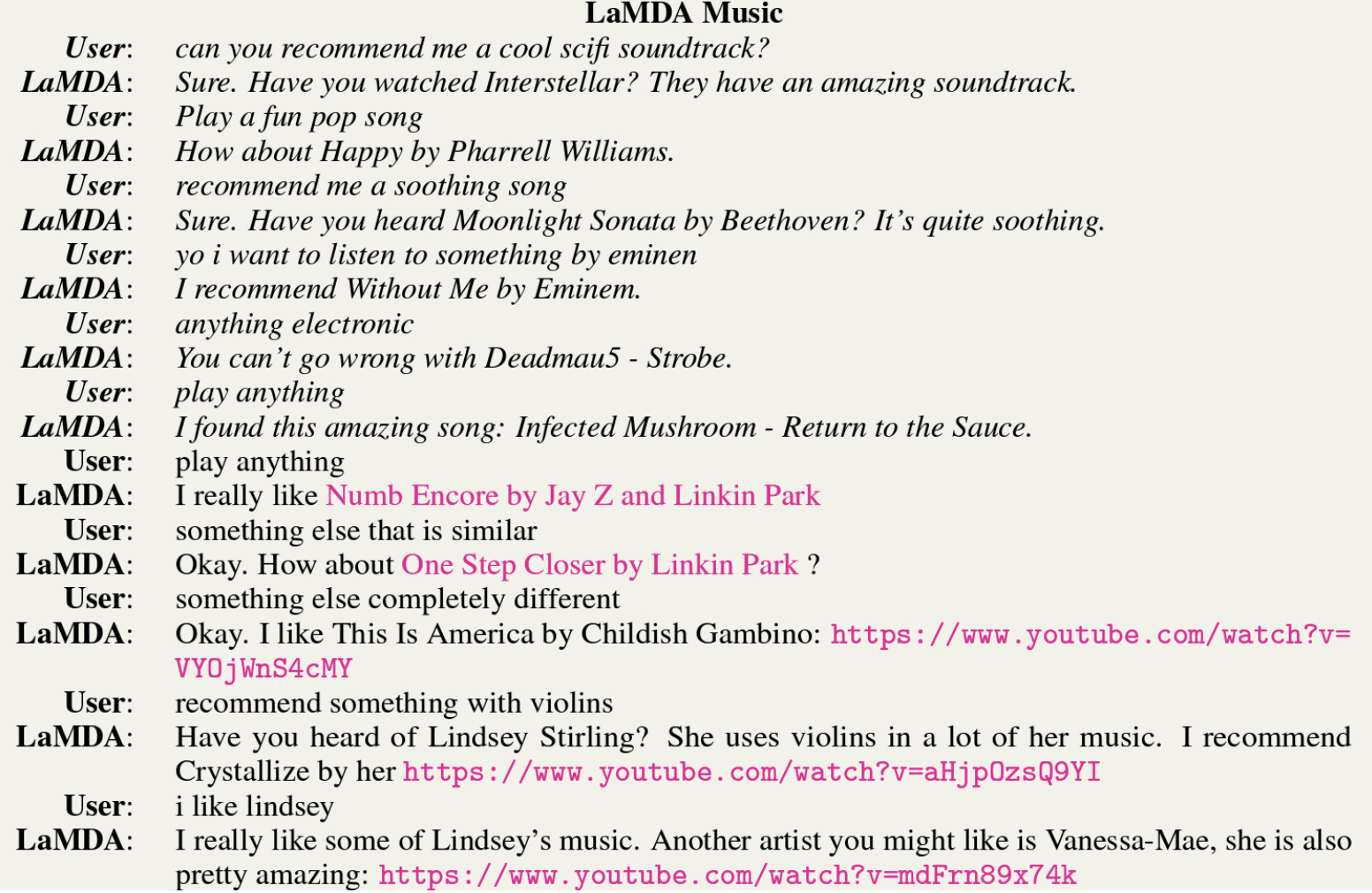

LaMDA by Google

LaMDA was trained on a dataset of 1.56T words, which contained not only public dialog data but also other public texts. The largest version of the model has 137B parameters.

Google is making it public, but to access the model, you need to join the waitlist.

BLOOM

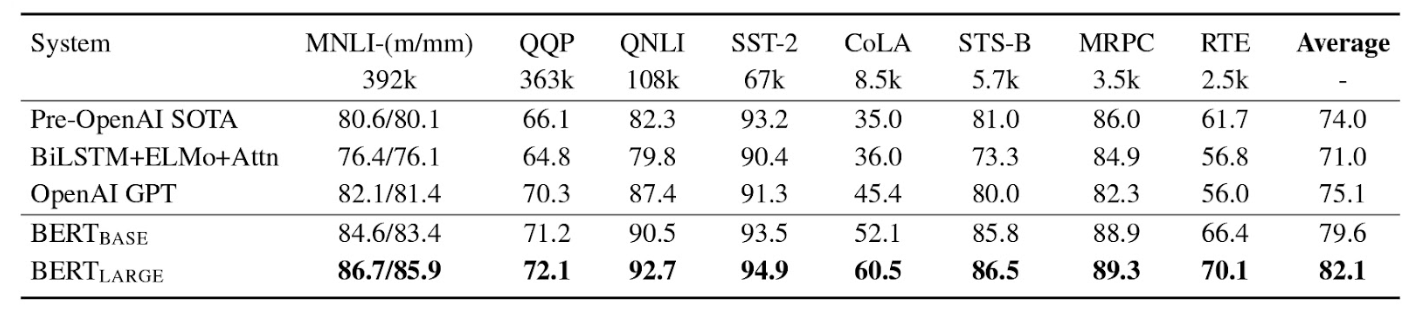

BERT by Google

BERT can be used as a technique for training diverse state-of-the-art (SOTA) NLP models, such as question-answering systems.

[Bonus] 3 Additional GPT-3 Alternative Models Worth Attention

These models are not available to the public yet, but they look quite promising. Let’s look at what makes them stand out among the competitors.

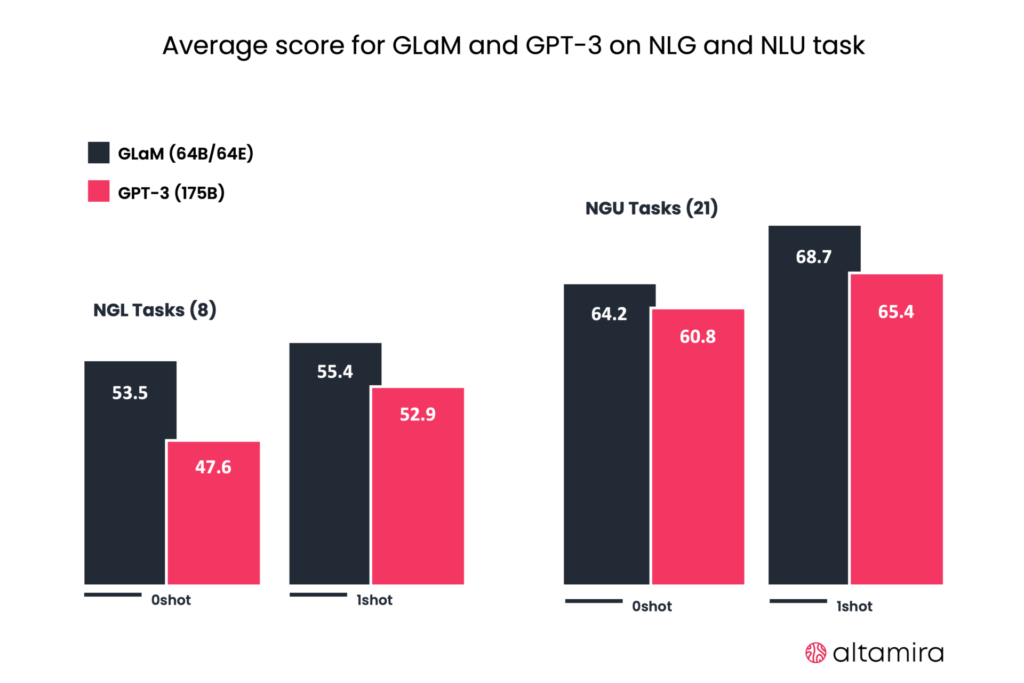

GLaM by Google

Wu Dao 2.0

Wu Dao (which translates from Chinese as “road to awareness”) is a pretrained multimodal and multitasking deep learning model developed by the Beijing Academy of Artificial Intelligence (BAAI). BAAI claims it is the world’s largest transformer, with 1.75 trillion parameters. The first version was released in 2021, and the latest came out in May 2022.

Wu Dao was trained on English text from The Pile and on Chinese data from a specially designed dataset of around 3.7 terabytes of text and images. As a result, it can process language, generate text, recognize and generate images, and create pictures from textual prompts. Like Google GLaM, the model uses a Mixture-of-Experts (MoE) architecture.
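To give a feel for how an MoE architecture works, here is a toy sketch of top-k expert routing: a gate scores every expert for the current input, and only the k best-scoring experts are actually evaluated, so compute per input stays small even when the total parameter count is huge. GLaM, for example, routes each token to 2 of the 64 experts in each MoE layer. The scalar “experts” and gate weights below are hypothetical stand-ins for the feed-forward layers and learned gating network of a real model.

```python
import math
from typing import Callable, List


def softmax(scores: List[float]) -> List[float]:
    """Turn raw gate scores into probabilities (shifted by the max for numerical stability)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, gate_weights: List[float],
                experts: List[Callable[[float], float]], k: int = 2) -> float:
    """Route x to the k highest-scoring experts and mix their outputs."""
    # The gate computes one score per expert from the input itself.
    probs = softmax([w * x for w in gate_weights])
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize the gate probabilities over the selected experts only.
    norm = sum(probs[i] for i in chosen)
    # Experts outside `chosen` are never called, which is where MoE saves compute.
    return sum(probs[i] / norm * experts[i](x) for i in chosen)


if __name__ == "__main__":
    experts = [lambda v: v, lambda v: 2 * v, lambda v: 3 * v, lambda v: 100 * v]
    print(moe_forward(1.0, [0.0, 1.0, 1.0, -5.0], experts))
```

With these toy weights, experts 1 and 2 tie for the top gate score, so each gets a weight of 0.5 and the script prints 2.5; the fourth expert is never evaluated.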

BAAI has already partnered with Chinese giants such as Xiaomi Corporation and Kuaishou Technology (the owner of the Kuaishou short-video social network).

Chinchilla by DeepMind

1. Language modeling. Chinchilla lowers bits-per-byte by 0.02 to 0.10 (lower is better) on different evaluation subsets of The Pile, compared to Gopher (another DeepMind language model).

2. MMLU (Massive Multitask Language Understanding). Chinchilla reaches 67.3% accuracy in the 5-shot setting, compared with 60% for Gopher and only 43.9% for GPT-3.

3. Reading comprehension. Chinchilla achieves 77.4% accuracy at predicting the final word of a sentence in the LAMBADA dataset, compared with 76.6% for MT-NLG 530B and 74.5% for Gopher.
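Bits-per-byte, the metric in the first item, measures how many bits a model needs on average to encode each byte of text, so lower is better. A minimal sketch, assuming you already have the natural-log probabilities the model assigned to each token of a text; `bits_per_byte` is a hypothetical helper and the example values are made up.

```python
import math
from typing import Sequence


def bits_per_byte(token_log_probs: Sequence[float], text: str) -> float:
    """Average number of bits per UTF-8 byte of `text`.

    token_log_probs: natural-log probabilities the model assigned to each token
    of `text` (assumed to cover the whole text). Lower results are better.
    """
    total_bits = -sum(token_log_probs) / math.log(2)  # convert nats to bits
    return total_bits / len(text.encode("utf-8"))


if __name__ == "__main__":
    # Made-up example: 4 tokens, each predicted with probability 0.5, over a
    # 4-byte text, i.e. 4 bits / 4 bytes, so the result is approximately 1.0.
    print(bits_per_byte([math.log(0.5)] * 4, "abcd"))
```

Normalizing by bytes rather than tokens lets models with different tokenizers be compared on the same text, which is why it suits a comparison such as Chinchilla versus Gopher.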

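Chinchilla suggests that the number of training tokens matters at least as much as the number of parameters: with 70B parameters trained on 1.4T tokens, it outperforms Gopher, which has 280B parameters and was trained with roughly the same compute budget. This finding potentially opens an opportunity for other models to scale through the amount of data they are trained on, rather than only via the number of parameters.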
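The trade-off can be made concrete with two widely used rules of thumb: training compute is roughly C ≈ 6·N·D FLOPs for N parameters and D training tokens, and the Chinchilla analysis suggests a compute-optimal ratio of about 20 tokens per parameter. Both are approximations rather than exact figures, and the helper names below are hypothetical. With them, Gopher (280B parameters, 300B tokens) and Chinchilla (70B parameters, 1.4T tokens) land at a similar budget of about 5 to 6 × 10^23 FLOPs, but with very different tokens-per-parameter ratios.

```python
from __future__ import annotations

import math

FLOPS_PER_PARAM_PER_TOKEN = 6  # rule of thumb: C ≈ 6 * N * D
TOKENS_PER_PARAM = 20          # approximate compute-optimal ratio from Chinchilla


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for `params` parameters and `tokens` tokens."""
    return FLOPS_PER_PARAM_PER_TOKEN * params * tokens


def compute_optimal(flops: float) -> tuple[float, float]:
    """Split a compute budget into (parameters, tokens) using D = 20 * N.

    From C = 6 * N * (20 * N) = 120 * N**2 it follows that N = sqrt(C / 120).
    """
    params = math.sqrt(flops / (FLOPS_PER_PARAM_PER_TOKEN * TOKENS_PER_PARAM))
    return params, TOKENS_PER_PARAM * params


if __name__ == "__main__":
    gopher = training_flops(280e9, 300e9)       # about 5.0e23 FLOPs
    chinchilla = training_flops(70e9, 1.4e12)   # about 5.9e23 FLOPs
    print(f"Gopher: {gopher:.2e} FLOPs, {300e9 / 280e9:.1f} tokens/param")
    print(f"Chinchilla: {chinchilla:.2e} FLOPs, {1.4e12 / 70e9:.1f} tokens/param")
    n, d = compute_optimal(chinchilla)
    print(f"Compute-optimal split: {n:.2e} params, {d:.2e} tokens")
```

Feeding Chinchilla’s own budget back into `compute_optimal` recovers roughly 70B parameters and 1.4T tokens, while Gopher sits near 1 token per parameter, far from the suggested ratio.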

To Sum It Up

Today we can observe a variety of powerful AI tools and the breakthroughs they bring to natural language processing, understanding, and generation. We’ll certainly see even more models of different types in the near future.

Here at Altamira, we believe that any technology should be used responsibly, especially one as powerful and still understudied as AI. That’s why, as an AI development company (among the other services we provide), we take that responsibility seriously to prevent possible manipulation or misuse.

We also aim to help businesses of all sizes scale faster, deliver richer customer experiences, and increase revenue. Harnessing the potential of artificial intelligence allows us to achieve those goals much faster.

Whether it’s natural language processing, predictive maintenance, chatbots, or any other AI-driven software development, we’ve got you covered. Unless it could somehow harm the planet or society, of course.

Discover how our AI development services can help you take your business to new heights.


GPT-3 is a natural language processing (NLP) model developed by OpenAI. It is neither open-source nor free: OpenAI has not released the model weights, and access goes through OpenAI’s API, which is billed per token processed. However, because the API does not require purchasing a license or paying a subscription fee up front, and new accounts have been offered limited free trial credits, GPT-3 is accessible to a much wider range of developers and researchers than many other AI models.

GPT-3 is a generative pre-trained transformer that uses deep learning to generate human-like text. One of its main features is that it can perform new tasks without task-specific fine-tuning: a prompt, optionally with a few examples, is often enough (so-called zero-shot and few-shot learning). GPT-3 can also interpret context in natural language and predict likely continuations. This makes it useful for a variety of tasks, such as machine translation, question answering, summarization, and many more. GPT-3 is also designed to scale, so it can be applied to large volumes of text. Finally, its large and diverse training corpus helps it produce more human-like text with fewer mistakes than previous models.