
Every day we are getting closer to self-driving cars, which are no longer a futuristic fantasy but a reality. Every year the automotive industry develops more and more automation options for its vehicles. Judging by the events of the pandemic, we can safely say that the future belongs to driverless vehicles. It is worth noting, however, that many studies show people are not ready to hand over full control of the car, and this is not surprising: so far, no one can guarantee the safety of these vehicles.

A self-driving vehicle (DC) is a vehicle capable of maneuvering on the road without human intervention but using several complex subsystems and devices for space orientation. Self-driving technology has been in development since 2009, and in 2013 by the US Department of Transportation’s National Highway Traffic Safety.

Autonomy and its levels

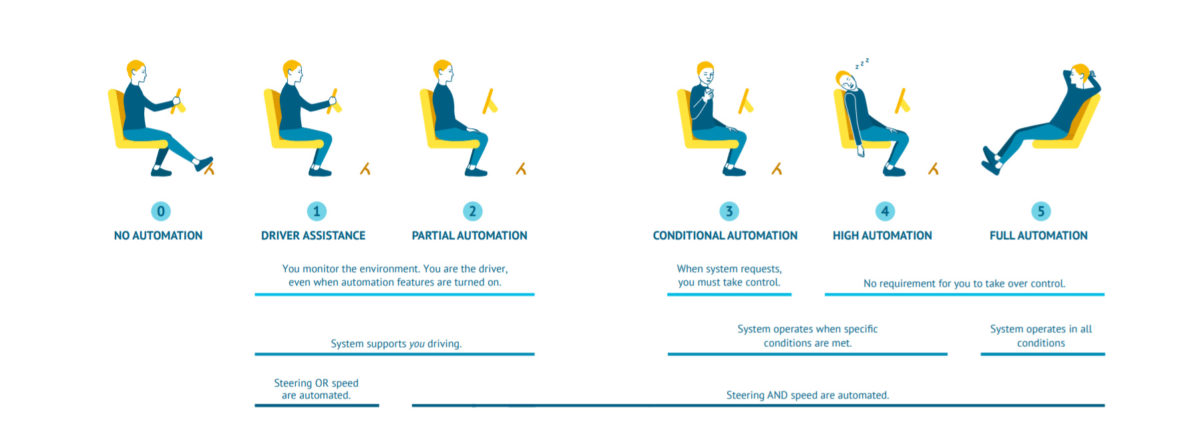

The Administration (NHTSA) has defined different autonomous driving levels, officially including them in its policy in 2016. Level 0 is a fully driver-driven vehicle. Levels 1 through 3 are semi-autonomous, and levels 4 and 5. are fully autonomous vehicles. Level 5 means the car can do everything a human driver can do. Many automakers say the technology will dawn in 2021.
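The level scheme can be captured as a small enumeration. The sketch below is an illustration only, not an official NHTSA or SAE artifact: the names `AutomationLevel`, `is_fully_autonomous` and `human_must_supervise` are hypothetical. The first helper follows the article's grouping (levels 4 and 5 are fully autonomous), and the second follows the common reading of SAE J3016 that a human monitors the road at levels 0 to 2.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Driving-automation levels 0-5 (hypothetical member names)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def is_fully_autonomous(level: AutomationLevel) -> bool:
    # Article's grouping: levels 4 and 5 count as fully autonomous.
    return level >= AutomationLevel.HIGH_AUTOMATION


def human_must_supervise(level: AutomationLevel) -> bool:
    # At levels 0-2 the human driver monitors the road at all times.
    return level <= AutomationLevel.PARTIAL_AUTOMATION


for level in AutomationLevel:
    print(level.value, level.name, is_fully_autonomous(level), human_must_supervise(level))
```

Level 3 falls in between: the system drives, but the human must be ready to take over when asked, so neither helper returns `True` for it.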

Where did automation come from? It began with remote engine start, reversing sensors, and various maintenance alerts. Today, adaptive cruise control, a speedometer display projected onto the windshield, and lane change assist are available in many cars. Such features have paved the way for the fully autonomous vehicle (AV).

The autonomy of a car depends on the environment in which it will be used. Here, environmental factors cover road quality, traffic density, the cultural characteristics of society, and more. Take a freeway, for example: when an obstacle is detected, a decision must be made within a few milliseconds, whereas a car driving around a corporate campus can take much longer to make the same decision.
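A quick calculation shows why milliseconds matter at freeway speeds: before the car even starts to react, it covers its speed (in metres per second) multiplied by the decision latency. The function below is a minimal sketch that assumes constant speed; the 120 km/h, 20 km/h and 100 ms values are illustrative, not figures from the article.

```python
def distance_during_latency(speed_kmh: float, latency_s: float) -> float:
    """Metres travelled while the system is still deciding (constant speed assumed)."""
    speed_ms = speed_kmh / 3.6  # km/h -> m/s
    return speed_ms * latency_s


# Freeway vs. a slow corporate campus, both with a 100 ms decision delay.
print(round(distance_during_latency(120, 0.1), 2))  # 3.33
print(round(distance_during_latency(20, 0.1), 2))   # 0.56
```

Every extra 100 ms of processing on the freeway costs over three metres of travel, while on the campus it costs about half a metre.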

The vehicle’s capabilities determine its operational design domain (ODD). The ODD defines the conditions under which a vehicle can and should operate safely. The ODD includes:

- environmental conditions,

- geographic and time-of-day restrictions,

- traffic or roadway characteristics.

For example, an autonomous cargo vehicle might be designed to carry cargo from point A to point B, 50 km away, along a specific route during daytime only. Such a vehicle’s ODD is limited to the prescribed route and time of day.
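An ODD can be thought of as a set of constraints the vehicle checks before operating. The sketch below models the cargo example; the class and field names (`OperationalDesignDomain`, `allowed_routes`, the route label `"A-B"`) and the 07:00 to 19:00 "daytime" window are hypothetical, and a real ODD would also cover conditions such as weather and road type.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class OperationalDesignDomain:
    """Hypothetical ODD for the cargo example: fixed route, daytime only."""
    allowed_routes: frozenset[str]
    earliest: time
    latest: time
    max_trip_km: float

    def permits(self, route_id: str, departure: time, trip_km: float) -> bool:
        # Every condition must hold; otherwise the vehicle should not operate.
        return (
            route_id in self.allowed_routes
            and self.earliest <= departure <= self.latest
            and trip_km <= self.max_trip_km
        )


cargo_odd = OperationalDesignDomain(
    allowed_routes=frozenset({"A-B"}),
    earliest=time(7, 0),
    latest=time(19, 0),
    max_trip_km=50.0,
)

print(cargo_odd.permits("A-B", time(10, 30), 50.0))  # True
print(cargo_odd.permits("A-B", time(22, 0), 50.0))   # False: outside daytime
print(cargo_odd.permits("A-C", time(10, 30), 20.0))  # False: unapproved route
```

For brevity the sketch only checks the departure time; a fuller version would also confirm that the trip ends inside the allowed window.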

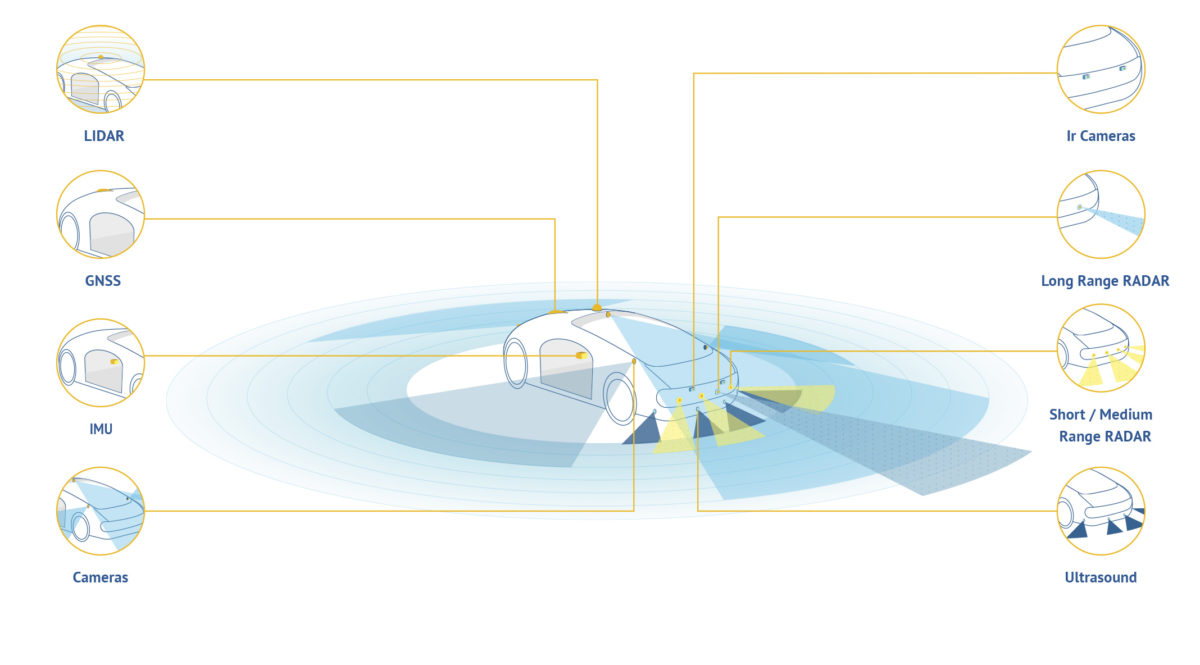

Many car manufacturers and technology companies, including Google (Waymo), are developing and improving AVs. The design, of course, differs from company to company. Still, all these cars use a set of sensors to perceive the environment, software to process the input data and determine the car’s trajectory, and a set of mechanisms for making decisions.

Sensing

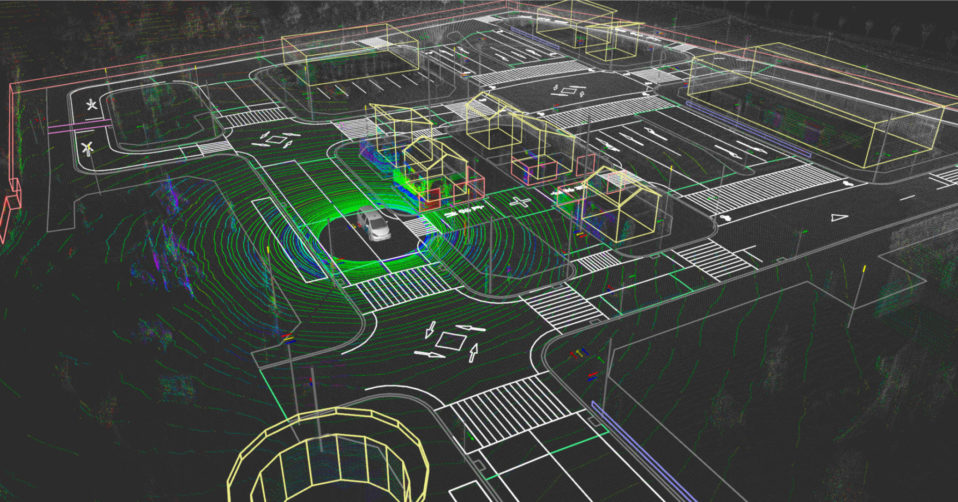

For an autonomous vehicle to function normally, it needs to simultaneously build a map of its environment and localize itself on that map. The input for this simultaneous localization and mapping (SLAM) process comes from sensors and from pre-existing maps created by AI systems and humans.

Navigation

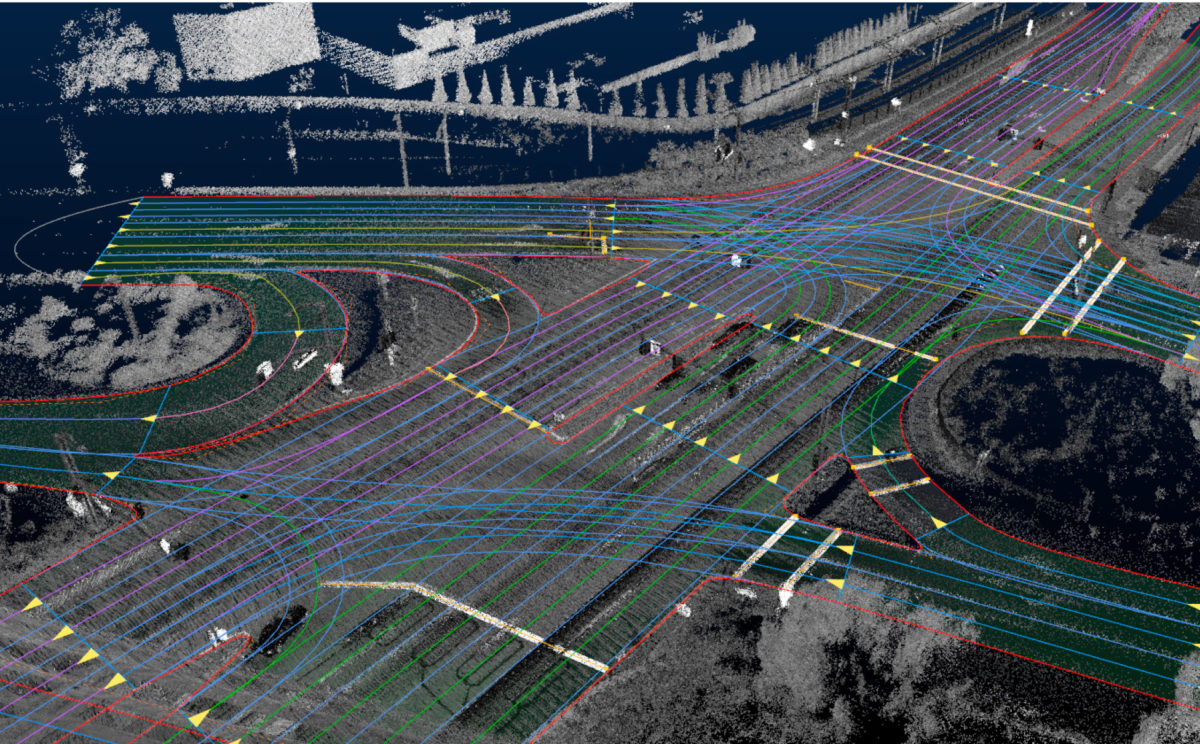

Today, navigation relies heavily on map services. But autonomous vehicles require high-definition (HD) maps that are two orders of magnitude more detailed than the maps we are used to. With decimetre-level accuracy or better, HD maps increase the spatial and contextual awareness of autonomous vehicles and provide redundancy for their sensors.

Using triangulation together with HD maps, the car’s current location can be determined accurately.
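The idea of fixing a position against mapped landmarks can be shown with a closely related technique, range-based trilateration: given measured distances to three landmarks whose coordinates are known from the map, subtracting the first circle equation from the other two leaves a 2×2 linear system. This is a minimal, noise-free sketch; the function name `trilaterate` and the landmark coordinates are hypothetical, and real systems fuse many noisy measurements with least squares or filtering.

```python
import math


def trilaterate(landmarks, distances):
    """Estimate (x, y) from distances to three landmarks with known map positions.

    Subtracting the first circle equation from the other two gives a 2x2
    linear system, solved here with Cramer's rule.
    """
    (x0, y0), (x1, y1), (x2, y2) = landmarks
    r0, r1, r2 = distances
    a11, a12 = 2 * (x1 - x0), 2 * (y1 - y0)
    a21, a22 = 2 * (x2 - x0), 2 * (y2 - y0)
    b1 = r0**2 - r1**2 + x1**2 - x0**2 + y1**2 - y0**2
    b2 = r0**2 - r2**2 + x2**2 - x0**2 + y2**2 - y0**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("landmarks are collinear; position is ambiguous")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - b1 * a21) / det
    return x, y


# Hypothetical landmarks from an HD map (metres) and a true car position.
truth = (3.0, 4.0)
landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
distances = [math.dist(truth, lm) for lm in landmarks]
x, y = trilaterate(landmarks, distances)
print(round(x, 6), round(y, 6))  # 3.0 4.0
```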

An equally important benefit of detailed maps is that they narrow the range of information the vehicle’s sensing system must collect, letting sensors and software concentrate on moving objects.

HD maps help driverless vehicles by providing information about lanes, road signs, and other objects along the road. These maps are typically layered, and at least one layer contains detailed 3D geometric information about the world to enable precise calculations.

HD maps are difficult and time-consuming to create, and they require a lot of storage space and bandwidth to store and transfer.

Thinking and learning

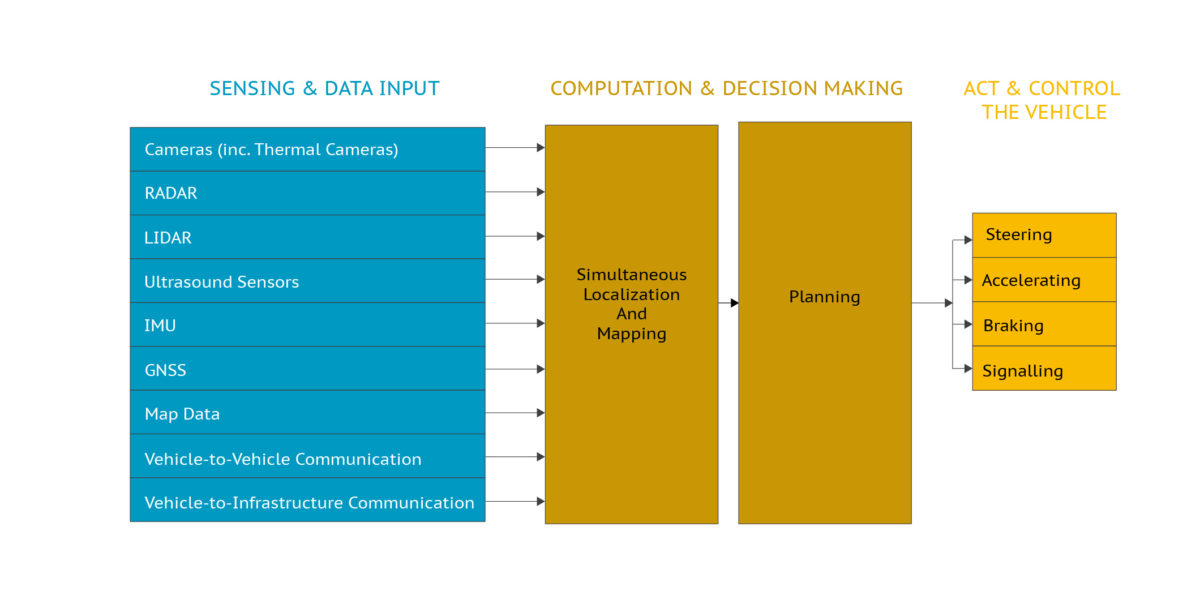

Using raw data from the sensors installed on the AV and the maps loaded into the system, the automated driving system builds a map of its environment while simultaneously tracking its location within it.

SLAM algorithms make all of this possible. Once the vehicle knows where it is, the system plans a route from one point to another.
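Route planning at this level is classically a shortest-path search over a road graph. The sketch below applies Dijkstra's algorithm using Python's standard `heapq` module; the road network and its segment lengths are made up, and a production planner would add live traffic, lane-level detail, and trajectory smoothing on top.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path), or (inf, []) if unreachable."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    return float("inf"), []


# Hypothetical road network: intersections and segment lengths in km.
roads = {
    "depot": {"A": 2.0, "B": 5.0},
    "A": {"B": 1.0, "C": 7.0},
    "B": {"C": 2.0},
    "C": {},
}
print(shortest_route(roads, "depot", "C"))  # (5.0, ['depot', 'A', 'B', 'C'])
```

Dijkstra's algorithm requires non-negative edge weights, which distances and travel times naturally satisfy.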

SLAM and Sensor Fusion

SLAM is a complex process because localization requires a map, while building the map requires a reasonable estimate of the vehicle’s position. To do its job accurately, SLAM relies on sensor fusion: it combines data from multiple sensors and databases to obtain more accurate information. This process involves associating, correlating, and combining data. The approach yields better-quality information, at lower cost, than relying on a single data source.
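The benefit of combining sources shows up in the simplest case: fusing two independent, noisy estimates of the same quantity by weighting each by the inverse of its variance, which is also the update step of a one-dimensional Kalman filter. This is a textbook technique used for illustration, not the fusion method of any particular vehicle; the GPS and lidar readings and their variances are invented.

```python
def fuse(estimate_a: float, var_a: float, estimate_b: float, var_b: float):
    """Inverse-variance weighted fusion of two independent estimates.

    Returns the fused estimate and its variance, which is never larger than
    the smaller of the two input variances.
    """
    gain = var_a / (var_a + var_b)  # Kalman gain: how much to trust estimate_b
    fused = estimate_a + gain * (estimate_b - estimate_a)
    fused_var = (1.0 - gain) * var_a
    return fused, fused_var


# Hypothetical position along the lane (metres): coarse GPS vs. a lidar-to-map match.
gps, gps_var = 102.0, 4.0       # roughly +/- 2 m
lidar, lidar_var = 100.5, 0.25  # roughly +/- 0.5 m
position, variance = fuse(gps, gps_var, lidar, lidar_var)
print(round(position, 3), round(variance, 3))  # 100.588 0.235
```

The fused variance (about 0.235) is lower than that of even the better sensor alone, which is the "better-quality information" the paragraph refers to.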

To start moving after processing the information, vehicles use one of two AI approaches:

1. Sequential (modular) approach. The driving task is decomposed into a hierarchical pipeline of components. In other words, each step (sensing, localization and mapping, path planning) is handled by a dedicated software element, and each component of the pipeline passes its data to the next.

2. Integrated approach. It is based on deep learning that handles all of these functions at the same time.
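The sequential approach can be pictured as a chain of functions, each consuming the previous stage's output. The sketch below is a toy: the stage functions, the `WorldState` fields, the dead-reckoning stand-in for SLAM, and the 10 m / 30 m thresholds are all hypothetical placeholders for what would be large perception, localization and planning subsystems.

```python
from dataclasses import dataclass, field


@dataclass
class WorldState:
    """Data handed from one pipeline stage to the next (hypothetical fields)."""
    raw_ranges_m: list[float]
    obstacles_m: list[float] = field(default_factory=list)
    position_m: float = 0.0
    target_speed_kmh: float = 0.0


def perceive(state: WorldState) -> WorldState:
    # Keep only range readings close enough to matter as obstacles.
    state.obstacles_m = [r for r in state.raw_ranges_m if r < 30.0]
    return state


def localize(state: WorldState, odometry_m: float) -> WorldState:
    # Stand-in for SLAM: dead-reckon from odometry.
    state.position_m += odometry_m
    return state


def plan(state: WorldState, cruise_kmh: float = 50.0) -> WorldState:
    # Stop if an obstacle is within 10 m, halve speed within 30 m, else cruise.
    nearest = min(state.obstacles_m, default=float("inf"))
    if nearest < 10.0:
        state.target_speed_kmh = 0.0
    elif nearest < 30.0:
        state.target_speed_kmh = cruise_kmh / 2
    else:
        state.target_speed_kmh = cruise_kmh
    return state


def run_pipeline(raw_ranges_m: list[float], odometry_m: float) -> WorldState:
    state = WorldState(raw_ranges_m)
    state = perceive(state)
    state = localize(state, odometry_m)
    return plan(state)


print(run_pipeline([45.0, 22.0], odometry_m=1.5).target_speed_kmh)  # 25.0
```

In the integrated (end-to-end) alternative, the three stage functions would be replaced by a single learned model mapping `raw_ranges_m` directly to `target_speed_kmh`. The modular version is easier to inspect, because each intermediate `WorldState` field can be checked on its own.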

Engineers and developers have still not agreed on which approach is better, but today the first, sequential approach is considered the more acceptable one.

End-to-end (e2e) learning is attracting growing interest as a potential solution to the challenges of complex artificial intelligence systems for autonomous vehicles. E2e learning applies iterative learning to a complex system as a whole and has been popularized in the context of deep learning. The end-to-end approach attempts to create an autonomous driving system with a single comprehensive software component that directly maps sensor inputs to driving actions. Advances in deep learning have expanded the capabilities of e2e systems, which are now considered a viable option. (Wevolver report)

To understand how an autonomous vehicle decides to start moving, let’s look at how sensor data is processed. The types of sensors on board matter here, since the software that processes their data depends on them.

Cars use several algorithms to recognize objects in an image. The first approach is object boundary detection, which evaluates changes in light intensity or color between neighboring pixels.
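Boundary detection of this kind comes down to measuring how sharply intensity changes between neighboring pixels. The sketch below computes a central-difference gradient magnitude on a tiny grayscale grid in plain Python. It illustrates the principle only, since real systems use operators such as Sobel or Canny on full camera images. The function name `edge_map`, the pixel values, and the threshold are illustrative assumptions.

```python
import math


def edge_map(image, threshold):
    """Mark interior pixels whose intensity gradient magnitude exceeds threshold.

    Central differences: gx = I[r][c+1] - I[r][c-1], gy = I[r+1][c] - I[r-1][c].
    Border pixels are left as 0.
    """
    rows, cols = len(image), len(image[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = image[r][c + 1] - image[r][c - 1]
            gy = image[r + 1][c] - image[r - 1][c]
            if math.hypot(gx, gy) > threshold:
                edges[r][c] = 1
    return edges


# Dark road surface (10) meeting a bright lane marking (200) at column 3.
frame = [[10, 10, 10, 200, 200] for _ in range(4)]
for row in edge_map(frame, threshold=50):
    print(row)
# [0, 0, 0, 0, 0]
# [0, 0, 1, 1, 0]
# [0, 0, 1, 1, 0]
# [0, 0, 0, 0, 0]
```

The double loop visits every pixel, which hints at why the text calls these approaches computationally intensive at camera resolutions and frame rates.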

LIDAR data can be used in a similar way to calculate the vehicle’s movement. But both approaches are computationally intensive and difficult to scale for an autonomous vehicle in an ever-changing environment.

Therefore, developers began to adopt machine learning, in which computer algorithms learn to perform analysis from existing data.

Machine learning methods

Modern software for self-driving cars uses different types of machine learning algorithms. Machine learning maps a set of inputs to a set of outputs based on the provided training set. Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and deep reinforcement learning (DRL) are the most common deep learning methods applied to autonomous driving.

Data collection

Before algorithms can be used, they need to be trained on datasets containing scenarios close to real-world driving. In any machine learning process, the data is divided into two parts: one is used for training and the other for verification (testing).
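The split itself is simple. What matters is that it is random and reproducible, so the test part is never seen during training. The sketch below uses only the standard library. The function name `split_dataset`, the 80/20 ratio, and the fixed seed are assumptions, not requirements from the article.

```python
import random


def split_dataset(samples, test_fraction=0.2, seed=42):
    """Shuffle a copy of samples and split it into (train, test) lists."""
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # local RNG: reproducible, no global state
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


frames = [f"frame_{i:03d}" for i in range(100)]  # hypothetical recorded frames
train, test = split_dataset(frames)
print(len(train), len(test))  # 80 20
```

With driving data, one common refinement is to split by whole recording or route rather than by individual frame, so that near-identical consecutive frames do not end up on both sides of the split.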

Many companies already use datasets with semantic segmentation of street objects, traffic sign classification, and pedestrian detection.

One way to collect data is to use a prototype vehicle driven by a human, with sensors recording all of the driver’s actions (pedaling, steering, etc.).

A second option is to use simulators. By creating realistic simulations, software companies can generate thousands of virtual scenarios.
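Part of how simulators reach thousands of scenarios is combinatorial: a handful of parameters, each with a few values, multiplies quickly. The sketch below enumerates a hypothetical parameter grid with `itertools.product`. The parameter names and values are invented for illustration, and simulation tools typically also add randomized variation within each scenario.

```python
from itertools import product

# Hypothetical scenario parameters; each combination is one simulated drive.
weather = ["clear", "rain", "fog", "snow"]
time_of_day = ["day", "dusk", "night"]
traffic = ["light", "moderate", "heavy"]
pedestrian_crossing = [False, True]
road_type = ["highway", "urban", "rural", "parking lot"]

scenarios = [
    {"weather": w, "time": t, "traffic": tr, "pedestrian": p, "road": r}
    for w, t, tr, p, r in product(weather, time_of_day, traffic, pedestrian_crossing, road_type)
]
print(len(scenarios))  # 288, i.e. 4 * 3 * 3 * 2 * 4
print(scenarios[0])
```

Adding just one more parameter with five values would raise the count to 1,440, which is how a modest grid reaches the thousands.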

Pandemic impact on driverless vehicles

Many experts forecast that a pandemic like COVID-19 will speed up the adoption of driverless vehicles for passenger transportation, goods delivery, and more. The cars still need disinfection, and most companies do it manually with sanitation teams. During an outbreak, driverless cars could minimize the risk of spreading disease.

Waymo, Uber, GM’s Cruise, Aurora, Argo AI, and Pony.ai are among the companies that have suspended driverless vehicle programs in the hope of limiting contact between drivers and riders. It’s a direct response to the ongoing health crisis caused by COVID-19, which has sickened over 250,000 people and killed more than 10,000 worldwide.

There are many examples of driverless vehicles in use in China: delivering groceries, transporting medical supplies, and even delivering meals to isolation areas and to people who have tested positive for the virus. At a clinic in Jacksonville, Florida, USA, four self-driving NAVYA shuttles are being used to move medical supplies and COVID-19 test samples from a testing center to the nearest laboratory. To minimize close contact and reduce the spread of the virus, companies are adopting autonomous vehicles to deliver orders and meet their customers’ needs.

Car manufacturers have long been trying to persuade us of the benefits of driverless cars. But who knew it would take a global pandemic to reveal the real-life benefits of autonomous driving:

- a reliable way to prevent person-to-person infection. On-demand services like Instacart have taken steps to minimize human contact. The main reason to use driverless cars is that they don’t require a potentially sick person as a driver.

- automated vehicles can operate around the clock with no need for human-to-human contact, making them well suited to delivery services in virus-hit areas.

- saved lives. With new driverless and connected vehicle technology, the risk of traffic accidents will vastly decrease, and the implications for auto insurance rates are mostly positive. These technologies will remove the human error that causes around 90 percent of all traffic accidents.

- less traffic. Automated driving would also reduce traffic. Because connected and driverless vehicles communicate with each other and with their surroundings, they can identify the optimal route, which helps spread demand for scarce road space.

- another plus is that they don’t require constant monitoring, while their efficiency can still be ensured.

- reduced emissions. One study shows that autonomous taxis alone could reduce greenhouse gas emissions per mile by 87 to 94% by 2030.

The potential benefits of driverless cars are attractive and far-reaching, and the industry’s future looks bright. If we let them, driverless cars could be the biggest thing to happen to transportation since Henry Ford’s assembly line.

Conclusion

Today, autonomous vehicles have reached a level of development at which they can drive without human intervention, but only under certain conditions. In the classification above, this is Level 4, or high automation, yet developers still face many problems to solve.

No technology is yet capable of providing Level 5, which is full automation. The most common automated personal vehicles on the market operate at Level 2, where the human driver still needs to monitor the road and decide when to take over. The main challenge lies in the environment that surrounds the self-driving car.